In a recent demonstration, developers showcased a pipeline for scalable inference that combines vLLM, an open-source, high-throughput inference and serving engine for large language models, with AWS Deep Learning Containers (DLCs). The aim is to make AI deployment more efficient and to tackle the cold-start problem in recommendation systems.

The approach chains five stages that together cover the recommendation workflow:

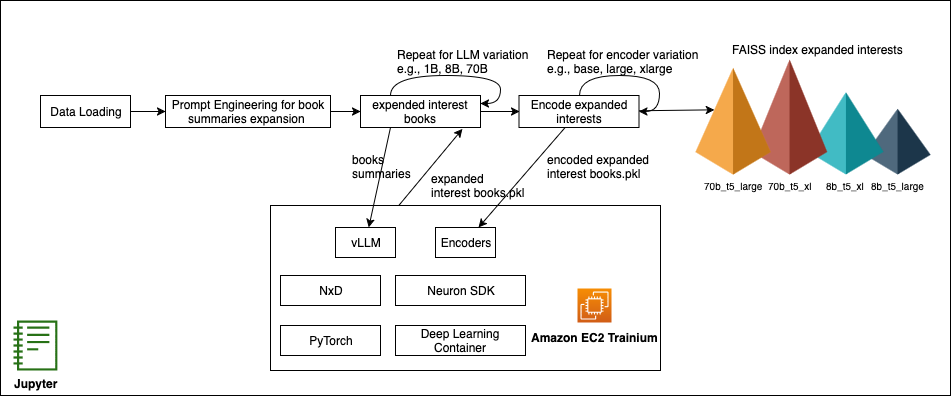

1. **Structured Prompting and Interest Expansion:** Structured prompts are generated to systematically explore user interests or content categories. These prompts elicit rich responses from a large language model, which are used to build detailed user profiles or sets of interest representations.

2. **Embedding Generation:** The expanded responses are then encoded into vector embeddings by an encoder model. These embeddings are high-dimensional representations of the generated content or user interests, in a form suitable for similarity search.

3. **Candidate Retrieval with FAISS:** FAISS (Facebook AI Similarity Search), a library for efficient similarity search over dense vectors, retrieves the closest candidates from a pre-indexed dataset. This surfaces the content or items most similar to the user’s inferred preferences.

4. **Result Validation:** A validation step then checks that the retrieved recommendations are grounded. It may filter out irrelevant or hallucinated content and verify logical or factual soundness.

5. **Scientific Benchmarking of Encoder-LLM Pairings:** The cold-start challenge, where recommendation models struggle because no prior user data exists, is treated as an experiment. Different combinations of large language models and encoders are tested against standard recommendation metrics such as precision, recall, and normalized discounted cumulative gain (NDCG). The goal is to keep refining the pipeline and identify the configurations that deliver the best return on investment (ROI).
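The embedding, retrieval, and evaluation stages can be sketched in a few lines of dependency-free Python. This is a minimal illustration under stated assumptions, not the pipeline's actual code. `embed` is a hypothetical hashed bag-of-words stand-in for a real encoder model. `retrieve` performs an exhaustive inner-product search, the same computation FAISS's `IndexFlatIP` does, and on L2-normalized vectors the inner product equals cosine similarity. Its `min_score` floor is a hypothetical, very rough proxy for the validation step. The metric functions assume binary relevance and compute precision@k, recall@k, and NDCG@k with a log2(rank + 1) discount for 1-based ranks.

```python
import math
import zlib


def embed(text: str, dim: int = 256) -> list[float]:
    """Hypothetical stand-in encoder: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * dim
    for token in text.lower().split():
        # crc32 is stable across runs, unlike Python's salted built-in hash()
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


def retrieve(query: list[float], index: dict[str, list[float]],
             k: int, min_score: float = 0.0) -> list[str]:
    """Exact top-k inner-product search with a hypothetical score floor."""
    scored = [
        (sum(q * v for q, v in zip(query, vec)), item_id)
        for item_id, vec in index.items()
    ]
    # Highest similarity first; the sort is stable, so ties keep index order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Keep the top k, then drop any candidate below the score floor.
    return [item_id for score, item_id in scored[:k] if score >= min_score]


def precision_at_k(ranked: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the top-k slots filled by relevant items."""
    hits = sum(1 for item in ranked[:k] if item in relevant)
    return hits / k


def recall_at_k(ranked: list[str], relevant: set[str], k: int) -> float:
    """Fraction of all relevant items that appear in the top k."""
    if not relevant:
        return 0.0
    hits = sum(1 for item in ranked[:k] if item in relevant)
    return hits / len(relevant)


def ndcg_at_k(ranked: list[str], relevant: set[str], k: int) -> float:
    """Binary-relevance NDCG: the item at 0-based position p is discounted by log2(p + 2)."""
    dcg = sum(1.0 / math.log2(p + 2)
              for p, item in enumerate(ranked[:k]) if item in relevant)
    # Ideal DCG: every relevant item (up to k) ranked at the top.
    ideal = sum(1.0 / math.log2(p + 2) for p in range(min(len(relevant), k)))
    return dcg / ideal if ideal else 0.0


if __name__ == "__main__":
    # Made-up catalog and "expanded interests" text standing in for LLM output.
    catalog = {
        "boots": "waterproof hiking boots for mountain trails",
        "tent": "lightweight tent for camping and hiking trips",
        "espresso": "espresso machine for home coffee brewing",
    }
    expanded = "enjoys hiking mountain trails and camping trips"
    index = {item_id: embed(text) for item_id, text in catalog.items()}
    ranked = retrieve(embed(expanded), index, k=2)
    relevant = {"boots", "tent"}
    print(ranked,
          precision_at_k(ranked, relevant, 2),
          recall_at_k(ranked, relevant, 2),
          ndcg_at_k(ranked, relevant, 2))
```

In a real deployment, `embed` would call the chosen encoder and the brute-force `retrieve` could give way to a FAISS index. Keeping the metric functions separate from both makes it cheap to swap encoder-LLM pairings and score each one on the same held-out relevance sets.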

By packaging and deploying the entire system with AWS DLCs, the team relies on cloud-optimized Docker images that simplify setting up and scaling ML workloads. The containers come pre-built with frameworks such as PyTorch and TensorFlow and are optimized for compatibility and performance on AWS infrastructure.

Because the pipeline brings generation, retrieval, and validation together in one deployment, it suits organizations that want to personalize user experiences at scale, particularly for new users or new content with little historical interaction data.

In summary, the integration of vLLM with AWS services and strategic model experimentation forms a robust foundation for scalable, accurate, and validated AI recommendation systems.

Source: https:// – Courtesy of the original publisher.